Humane Ingenuity #3: AI in the Archives

by Dan Cohen

Welcome back to Humane Ingenuity. It’s been gratifying to see this newsletter quickly pick up an audience, and to get some initial feedback from readers like you that can help shape forthcoming issues. Just hit reply to this message if you want to respond or throw a link or article or book my way. (Today’s issue, in fact, includes a nod to a forthcoming essay from one of HI’s early subscribers.)

OK, onto today’s theme: what can artificial intelligence do in a helpful way in archives and special collections? And what does this case study tell us more generally about an ethical and culturally useful interaction between AI and human beings?

Crowdsourcing to Cloudsourcing?

Over a decade ago, during the heady days of “Web 2.0,” with its emphasis on a more interactive, dynamic web through user sharing and tags, a cohort of American museums developed a platform for the general public to describe art collections using more common, vernacular words than the terms used by art historians and curators. The hope was that this folksonomy, that riffy portmanteau on the official taxonomy of the museum, would provide new pathways into art collections and better search tools for those who were not museum professionals.

The project created a platform, Steve, that had some intriguing results when museums like the Indianapolis Museum of Art and the Metropolitan Museum of Art pushed web surfers to add their own tags to thousands of artworks.

Some paintings received dozens of descriptive tags, with many of them straying far from the rigorous controlled vocabularies and formal metadata schema we normally see in the library and museum world. (If this is your first time hearing about the Steve.museum initiative, I recommend starting with Jennifer Trant’s 2006 concept paper in New Review of Hypermedia and Multimedia, “Exploring the Potential for Social Tagging and Folksonomy in Art Museums: Proof of Concept” (PDF))

(Official museum description, top; most popular public tags added through Steve, bottom)

(Official museum description, top; most popular public tags added through Steve, bottom)

Want to find all of the paintings with sharks or black dresses or ice or wheat? You could do that with Steve, but not with the conventional museum search tools. Descriptors like “Post-Impressionism” and “genre painting” were nowhere to be seen. As Susan Chun of the Met shrewdly noted about what the project revealed: “There’s a huge semantic gap between museums and the public.”

I’ve been thinking about this semantic gap recently with respect to new AI tools that have the potential to automatically generate descriptions and tags—at least in a rough way, like the visitors to the Met—for the collections in museums, libraries, and archives. Cloud services from Google, Microsoft, Amazon, and others can do large-scale and fine-grained image analysis now. But how good are these services, and are the descriptions and tags they provide closer to crowdsourced words and phrases or professional metadata? Will they simply help us find all of the paintings with sharks—not necessarily a bad thing, as the public has clearly shown an interest in such things—or can they—should they—supplement or even replace the activity of librarians, archivists, and curators? Or is the semantic gap too great and the labor issues too problematic?

Bringing the Morgue to Life

Pilots using machine vision to interpret the contents of historical photography collections are already happening. Perhaps most notably, the New York Times and Google inked a deal last year to have Google’s AI and machine learning technology provide better search tools for their gigantic photo morgue. The Times has uploaded digital scans of their photos to Google’s cloud storage—crucially, the fronts and backs of the photos, since the backs have considerable additional contextual data—and then Google uses their Cloud Vision API and Cloud Natural Language API to extract information about each photo.

Unfortunately, we don’t have access to the data being produced from the Times/Google collaboration. Currently it is being used behind the scenes to locate photos for some nice slide shows on the history of New York City. But we can assume that the Times gets considerable benefit from the computational processing that is happening. As Google’s marketing team emphasizes, “the digital text transcription isn’t perfect, but it’s faster and more cost effective than alternatives for processing millions of images.”

Unspoken here are the “alternatives,” by which they clearly mean processes involving humans and human labor. These new AI/ML techniques may not be perfect (yet? no, likely ever, see “Post-Impressionism”), but they have a strong claim to the “perfect is the enemy of the good” school of cultural heritage processing. There’s a not-so-subtle nudge from Google: Hey archivists, you wanna process millions of photos by hand, with dozens of people you have to hire writing descriptions of what’s in the photos and transcribing the backs of them too? Or do you want it done tomorrow by our giant computers?

As Clifford Lynch writes in a forthcoming essay (which I will link to once it’s published), “[Machine learning applications] will substantially improve the ability to process and provide access to digital collections, which has historically been greatly limited by a shortage of expert human labor. But this will be at the cost of accepting quality and consistency that will often fall short of what human experts have been able to offer when available.”

This problem is very much in the forefront of my mind, as the Northeastern University Library recently acquired the morgue of the Boston Globe, which contains the original prints of over one million photographs that were used in the paper, as well as over five million negatives, most of which have never been seen beyond the photo editing room at the Globe. It’s incredibly exciting to think about digitizing, making searchable, and presenting this material in new ways—we have the potential to be a publicly accessible version of the NYT/Google collaboration.

But we also face the difficult issue of enormous scale. It’s a vast collection. Along with the rest of the morgue, which includes thousands of boxes of clippings, topical files, and much else, we have filled a significant portion of the archives and special collections storage in Snell Library.

Fortunately the negative sleeves have some helpful descriptive metadata, which could be transcribed by humans fairly readily and applied to the 20-40 photos in each envelope. But what’s really going on in each negative, in detail? Who or what appears? That’s a hard and expensive problem. (Insert GIF of Larry Page slowly turning toward the camera and laughing like a Bond villain.)

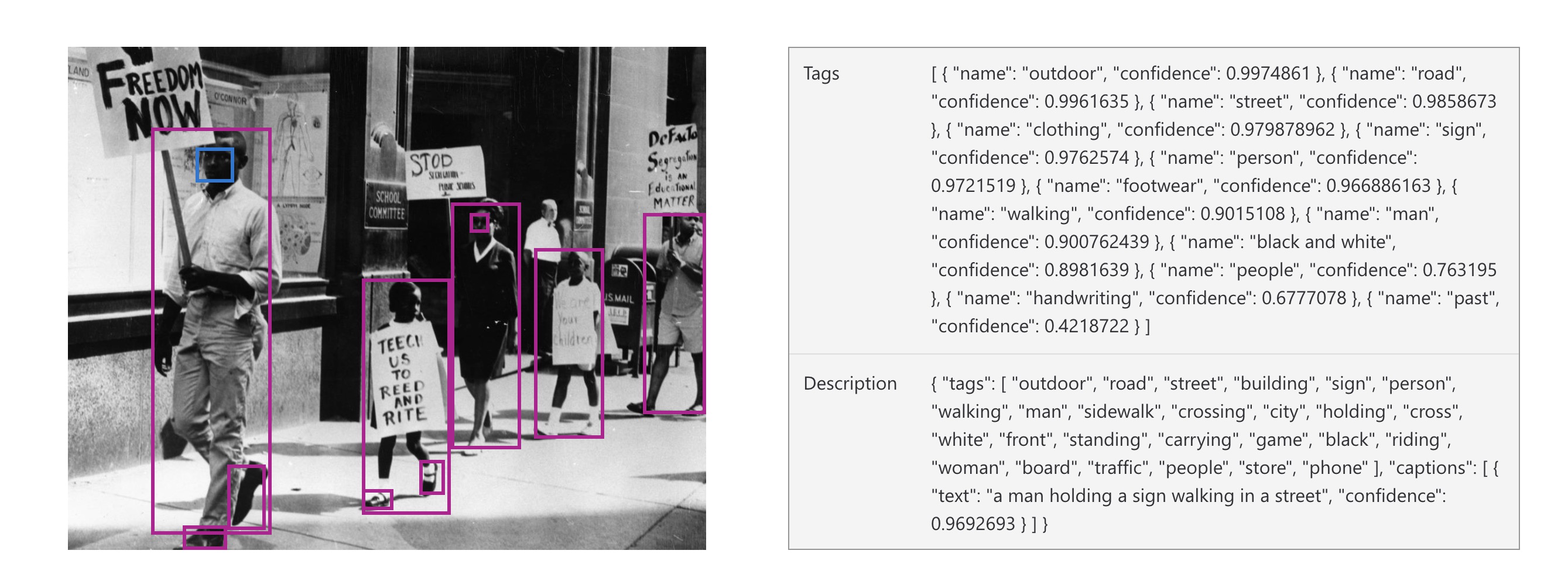

I have started to test several machine vision APIs, and it’s interesting to note the different approaches and strengths of each service. Here’s a scan of a negative from our Globe archive, of a protest during the upheaval of Boston’s school desegregation and busing era, uploaded to Google’s Cloud Vision API (top) and Microsoft’s Computer Vision API (bottom).

I’ll return to these tests in a future issue of HI, as I am still experimenting, but some impressive things are happening here, with multiple tabs showing different analyzes of the photograph. Both services are able to identify common entities and objects and any text that appears. They also do a good job analyzing the photo as a set of pixels—its tones and composition, which can be helpful if, say, we don’t want to spend time examining a washed out or blurry shot.

There are also creepy things happening here, as each service has a special set of tools around faces that appear. As Lynch notes, “The appropriateness of applying facial recognition [to library materials] will be a subject of major debate in coming years; this is already a very real issue in the social media context, and it will spread to archives and special collections.”

Google, in a way that is especially eyebrow-raising, also connects its Cloud Vision API to its web database, and so was able to figure out the historical context of this test photograph rather precisely (shown in the screen shot, above). Microsoft synthesizes all of the objects it can identify into a pretty good stab at a caption: “A man holding a sign walking in a street.” For those who want to make their collections roughly searchable (and just as important, provide accessibility for those with visual impairments through good-enough textual equivalents for images), a quick caption service like this is attractive. And it assigns that caption a probability: a very confident score, in this case, of 96.9%.

In the spirit of Humane Ingenuity, we should recognize that this is not an either/or situation, a binary choice between human specialists and vision bots. We can easily imagine, for instance, a “human in the loop” scenario in which the automata provide educated guesses and a professionals in libraries, archives, and museums provide knowledgeable assessment, and make the final choices of descriptions to use and display to the public. Humans can also choose to strip the data of facial recognition or other forms of identity and personal information, based on ethics, not machine logic.

In short, if we are going to let AI loose in the archive, we need to start working on processes and technological/human integrations that are the most advantageous combinations of bots and brains. And this holds true outside of the archive as well.

Neural Neighbors

The numerical scores produced by the computer can also relate objects in vast collections in ways that help humans do visual rather than textual searches. Doug Duhaime, Monica Ong Reed, and Peter Leonard of Yale’s Digital Humanities Lab ran 27,000 images from the nineteenth-century Meserve-Kunhardt Collection (one of the largest collections of 19th-century photography) at the Beinecke Rare Book and Manuscript Library through a neural network, which then clustered the images into categories through 2,048 ways of “seeing.” The math and database could then be used to produce a map of the collection as a set of continents and archipelagos of similar and distant photographs.

Through this new interface, you can zoom into one section (at about 100x, below) to find all of the photographs of boxers in a cluster.

The Neural Neighbors project reveals the similarity scores in another prototype search tool. As David Leonard noted in a presentation (PDF) of this work at a meeting of the Coalition for Networked Information, much of the action is happening in the penultimate stage of the process, when those scores emerge. And that is also where an archivist can step in and take the reins back from the computer, to transform the mathematics into more rigorous categories of description, or to dream up a new user interface for accessing the collection.

(See also: John Resig’s pioneering work in this area: “Ukiyo-e.org: Aggregating and Analyzing Digitized Japanese Woodblock Prints.”)

Some Fun with Image Pathways

Liz Gallagher, a data scientist at the Wellcome Trust, recently used similar methods to the Neural Neighbors project on the Welcome’s large collection of digitized works related to health and medical history.

Then, using Google’s X Degrees of Separation code, she created pathways of hidden connections between dissimilar images, tracing a set of hops between more closely related images to get there one step at a time, from left to right in each row.

Each adjacent image is fairly similar according to the computer, but the ends of the rows are not. And some of the hops, especially in the middle, are fairly amusing and even a bit poetic?